Introduction

Computers, ranging from individual handheld devices to the scale of all the computers in the Internet, are inherently concurrent devices that perform multiple tasks simultaneously.

For example, considering your handheld device, you may be simultaneously listening to music and receiving e-mails on the device, while reading this text with the web browser. In fact, even the web browser is quite happy to accept your input while it is simultaneously loading data from the web and rendering that data on the display as it becomes available.

Our objective in this round is to initiate you to the basic concepts and principles of concurrency, in programming terms.

A single thread of execution

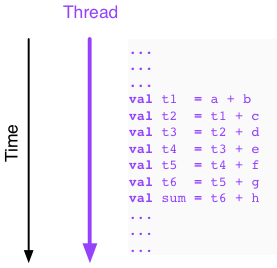

Before starting to think about concurrency, let us review our current view of programming and program execution. For pedagogical purposes we have until now intentionally viewed programming as an activity without concurrency.

With such a restricted view, program execution consists simply of the

execution of a sequence of elementary operations. For example, the

execution of the following sequence computes the sum of the eight values

a, b, …, h:

val t1 = a + b

val t2 = t1 + c

val t3 = t2 + d

val t4 = t3 + e

val t5 = t4 + f

val t6 = t5 + g

val sum = t6 + h

What is above is of course a very simple program without loops or other conditional constructs, but the same principle stands for all programs without concurrency: program execution consists of a single sequence of executed instructions. In other words, program execution follows a single thread of execution or thread.

Programs that employ only a single thread of execution are called single-threaded or sequential programs.

Multiple threads

A multithreaded program employs multiple threads that execute in parallel to improve its performance.

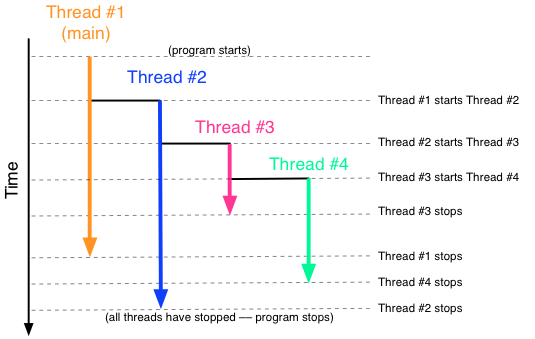

A multithreaded program starts with a single thread of execution, the main thread, which can then start one or more new threads, each of which in turn can start new threads, and so forth. The program stops when all of its threads have stopped.

Why concurrency and multithreading?

Concurrent, multithreaded programs are useful for a number of reasons:

Parallel speedup. To utilise the full potential of the hardware in computationally intensive applications such as scientific computing or graphics, a program must make intelligent use of the hardware capabilities for parallel execution. In practice this means careful use of multiple threads, or other higher-level abstractions for concurrency. For example, in graphics programming it is common to work with groups of threads that each execute the same instruction on multiple independent data items, thus giving a speedup over sequential programming that is proportional to the number of independent data items that are processed in parallel. Similarly, multiple independent resources can be accessed in parallel, for example, when loading data from multiple sources on the web.

Throughput. Not all sequential computations admit parallel speedup, in particular because the next operation in a sequence of operations may depend on the outcome of the current operation, and hence the next operation cannot be started before the result of the current operation is available. Yet, the capability for parallel execution can be used to improve throughput in applications that execute multiple independent sequences of such operations. For example, a program that implements a web service typically receives multiple requests, each of which must be serviced with a sequence of dependent operations (e.g. parse request from client, load relevant data from database, filter and summarise loaded data, format and transmit summary to client), but the requests themselves are independent of each other and can be executed in parallel. In this case parallel execution does not decrease the time to complete any individual request, but the throughput in requests serviced per time unit is increased.

Availability. Many applications require availability and low response times from a program. For example, a program implementing a web service should respond immediately with an acknowledgement that a request has been received even if there are many other requests currently being processed and it will take time to complete the request. Similarly, most user programs should remain responsive to user input even if the program is simultaneously loading input from multiple sources and/or writing output. A good example in this regard is a web browser that is loading multiple resources (text, images, video, audio) simultaneously from the web and rendering them on output devices as they arrive, while at the same time accepting user input from the keyboard and via the graphical user interface.

Easy parallelism

Our intent in this round is to focus on what can perhaps be described as easy parallelism.

That is, our intent is to benefit from parallelism and multithreading using high-level programming abstractions that enable us to (almost completely) relegate the low-level thread-management and synchronization to library code.

In programming terms we only want to indicate (specify) that certain parts of our program can be executed in parallel. The nitty-gritty details (the implementation) of the parallel execution is then relegated to an underlying execution service.

Concurrency

In essence, a concurrent program is a program that has the ability to execute parts of its code in parallel. If and how this happens can be decided by other parts of the system, such as a scheduler or resource pool.

We will start by looking at an example of multithreading in

Scala through the Runnable trait. By playing with it in the

console we will also begin to get an idea of the basics of

concurrent programs.

Then we will discuss some of the the key concepts and principles related to parallelism. The most important concept in this regard is dependence between steps of a sequential computation.

Once we have the basic principles available, we are ready to look at some convenient high-level programming abstractions available in Scala that enable us to easily benefit from parallelism.