Introduction

Computer programming is laborious, but comes with the benefit that once our program is ready, the machine can take over, and do the remaining work for us, at a peak rate of billions of instructions per second from every processor core employed for the task.

In Module II: Programming abstractions and analysis and Module III: Frontiers we have studied programming abstractions intended to free our mind from nitty-gritty details and to enable the pursuit of higher-level goals.

In this round our intent is to introduce a yet further family of tools/abstractions in our quest for automation. Yet again this family of tools is intended to free us as programmers from putting conscious effort into nitty-gritty details. In fact, our intent is to let the machine learn the details. That is, perhaps we could teach the machine instead of programming it?

What is teaching? What is learning?

Let us reflect a little bit on teaching and learning, with the objective of contrasting these notions with programming as we have studied thus far.

Courtesy of our prior education and our role as students/teachers in gaining/facilitating such an education, we of course have a fairly tangible grasp of the concepts teaching and learning, together with the roles of the teacher and the learner (that is, the student) associated with these concepts.

Let us start with an observation that ought to signal to us the serendipity of learning, from a programming perspective.

Learning requires effort, from the learner.

A teacher can ease this effort, for example, by carefully preparing the material that is being taught so that it is easy, or at least in some sense easier, to learn. But yet it is arguably the case that no learning takes place unless the learner is willing to put in some effort.

So why should we, as programmers, be excited about an everyday observation that learning requires effort?

As programmers we of course care a great deal about how much we should put in human effort into getting a task automated. The less human effort, the better.

If we consider programming in the classical sense (as we have done thus far in the course), then essentially all the hard work is on the side of the programmer. Our program must be a perfect, unequivocal description of how to accomplish the task that we want to automate. The computer will then execute our program and will get the job done, but only after we have put in the effort to make a perfect description of what needs to be done.

Ideally, we would like to put in less human effort, give a less-than-perfect description of the task to the computer, and let the machine figure it out. In other words, as programmers we would like to teach (or train) the machine instead of programming it. The machine needs to put in effort to learn, but this effort is compensated by less human effort invested in programming. At least this is the goal that we want to achieve as programmers.

The good news is that this goal is attainable in many contexts, and is becoming increasingly attainable because of increasing amounts of data being available for training purposes.

Ideally we would like to just throw data at the computer and let it learn, all by itself, without our intervention as programmers. In practice of course it does take programming effort to clean and prepare the data to facilitate learning. Also the learning process itself requires algorithms (that we as programmers must implement) that adapt to and automatically discover relevant regularities in the data. Yet the total human effort required is ideally far less than what would be required in terms of preparing a perfect, unequivocal description of the task to be automated in classical terms.

Generalisation from presented examples – a roadmap for this round

This round initiates you to machine learning through a brief study of generalisation from examples.

To adopt a more concrete perspective (which will be further elaborated in programming terms in the exercises), let us try to teach the machine how to see.

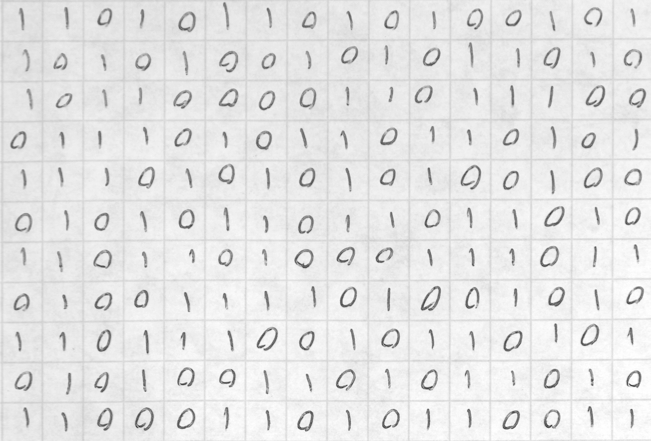

For example, think about the task of reading the handwritten binary digits from the following scanner output:

To a human identifying between handwritten zeroes and ones is of course an effortless task, if we put our mind to it.

But how do we give a computer this ability to read, that is, how do we train the computer to identify between handwritten zeroes and ones?

What you should observe immediately is that no two handwritten zeroes (or no two handwritten ones) are precisely the same. Furthermore, the scanner output has noise in it, and the exposure varies (some squares are systematically darker/lighter than others) in the output. And so forth. All in all, it appears like a somewhat taxing task to program the machine to distinguish between handwritten zeroes and ones. That is, if we want to program a solution, we have to tell the machine precisely what it is that makes a zero a zero and a one a one. A zero is “round” and a one is “vertical”, say. But how do we program “round” and “vertical” ?

Our intent is to have the machine learn to distinguish between zeroes and ones instead, by the process of generalisation from presented examples. That is, our intent is to show the machine examples of zeroes and ones, after which the machine will by itself learn to tell the difference. (Of course, we will supply some assistance in the process. That is, we act as teachers.)

Once we understand the basic concepts, we continue with a brief discussion of risks and rewards of using machine-learning techniques to assist us as programmers.